Disgusted by that name. It’s the most gen-z thing and it’s kinda nonsense. Get off my lawn!

(it’s our lawn lol it’s fine really, you guys keep “vibing”… Does it “fuck”? Is that still a thing? Do things fuck?)

I have spent a little over a week with Gemini Pro writing a program that leverages LM Studio and my two once-high-end GPUs. They had normal graphics card prices (the kind you expect if you grew up in the 90s – see Have GPU prices stopped being absurd yet?) because no one remembers the 20X0 series. 20GB of VRAM and modern CUDA? Hell yeah let’s go.

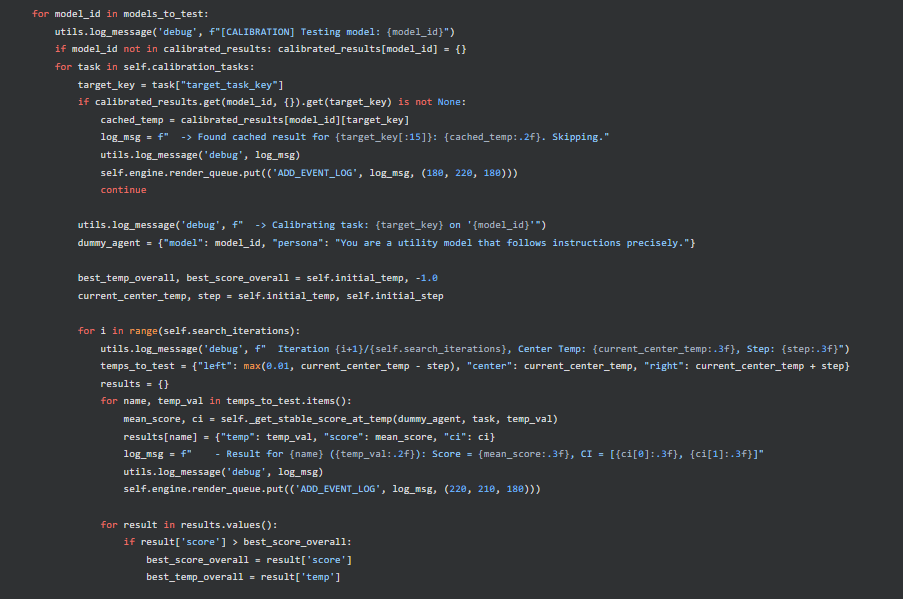

Is it ‘there’ yet? Does it replace software engineers? Absolutely the fuck not. Look at this garbage:

That is an if statement five levels deep inside nested for loops. Disgusting. And the moment you throw that at a fresh context (‘chat’) Gemini shits itself and will randomly drop code or shorten things. Even it doesn’t like it, but at times you still have to tell it to program like someone who will have to understand what they’re looking at when they come back to it (“Break it into helper functions, gemini. Use the wrapper function gemini. Stop using placeholder functions and write the damn code, gemini.”)

It makes silly decisions. You can tell it to fix them and it generally does after a few tries. You will not know they’re silly if you’re not already a programmer that cares about good engineering.

I’m one of those idiots that got heavily into IT before they realized they hate most things about it. The hustle bullshit is psychologically painful to someone that’s experienced abuse. You guys go compete and strangle each other all you want, I’ll be over here playing with dangerous chemicals and following exacting processes to the letter with the kind of hyperawareness that only trauma can bring. So relaxing.

But one of the things I do love is software engineering.

Building things is my jam. Imagining how they can fail and cutting that off at the front with the ‘best’ choice given a bunch of conflicting factors is deeply satisfying to me. I will dive into a deep refactor without blinking when I’m working on something, which most people loathe – systematically listing and binning, setting constraints, separation of concerns, creating giant machines that you think are barely holding together at first glance but which work with precision together.

A lot of the time people talk about AI in scolding terms like “oh it makes bad decisions, you’d have to be an amateur to use it” and you know what… no? It kinda works.

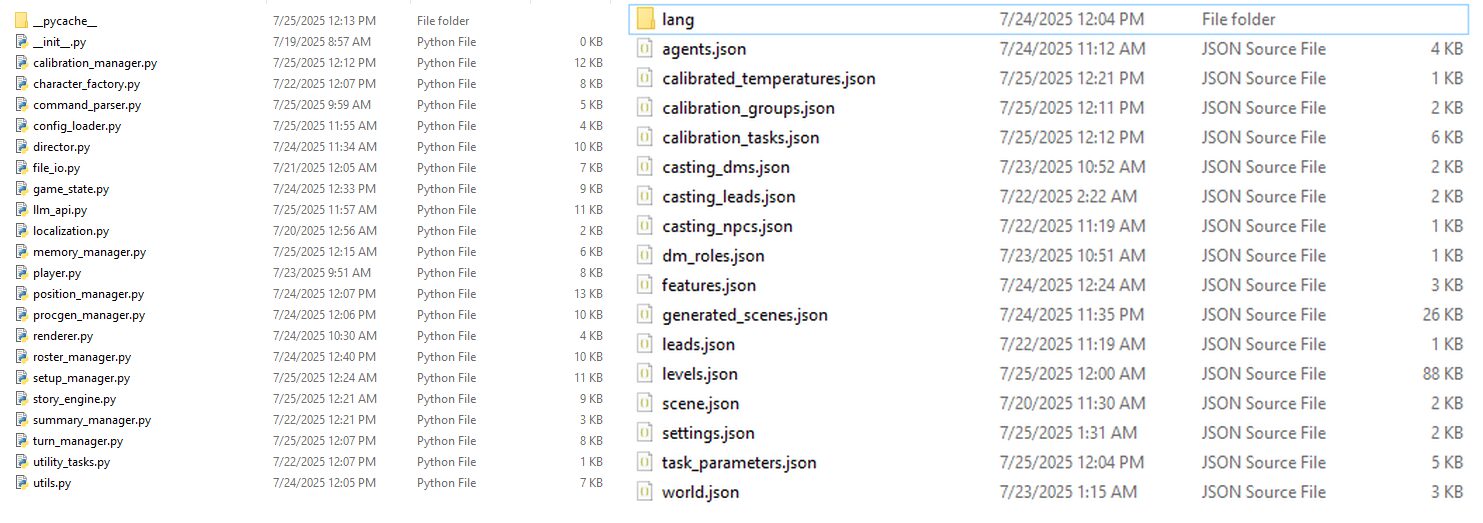

All of this starting from a single file script.

Now, of course, as we all know lines of code = quality. A bigger codebase means better and better means IPO. Anyway, it is impressive it was done in a week for what it does, by any standard.

I will let Gemini summarize because at this point I would be hard-pressed to list all of its features.

This codebase implements an LLM-driven Narrative Builder, a sophisticated framework for generating dynamic, interactive stories using Large Language Models (LLMs). It functions as an automated Dungeon Master (DM) or storyteller, where a cast of AI-controlled characters, each with a unique persona, collaborates to build a narrative in a persistent world.

The application presents the story through a terminal-based, roguelike-style interface using the tcod library. It features procedural generation of scenes and environments, a long-term memory system for characters powered by a vector database, and a meta-AI called “The Director” that actively manages the story’s cast and pacing. A key feature is the ability for a human player to interrupt the AI-driven narrative at any time and take control of a character, directly influencing the story’s direction.

Key Features

-

Multi-Agent Storytelling: The narrative is not generated by a single LLM call but by a “society” of AI agents. These include high-level DMs (Storyteller, Narrator, Tactician), primary characters (Leads), and temporary Non-Player Characters (NPCs). Each agent has a distinct persona and instructions, defined in JSON configuration files.

-

The Director AI: A unique meta-agent (DirectorManager) that observes the story’s progress. At the end of each story “cycle,” it can rewrite a character’s instructions to reflect their development, remove a character from the story, or introduce a new one from a “casting” list to keep the narrative engaging.

-

Procedural Environment Generation (PEG): The system uses an LLM (ProcGenManager) to conversationally generate a map layout. It describes the scene one feature at a time (e.g., “A grand hall opens up before you,” “To the left, a rusty iron gate stands ajar”). These narrative beats are classified and translated into a tile-based 2D map.

-

Vector-Based Long-Term Memory: To combat the limited context windows of LLMs and ensure narrative consistency, the system uses LanceDB and sentence-transformers (MemoryManager). Key events are summarized and stored as vector embeddings, allowing characters to retrieve relevant “memories” from the distant past based on the current situation.

-

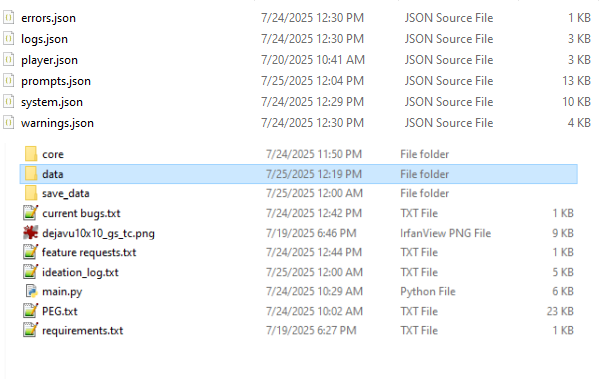

Dynamic Temperature Calibration: The system includes a CalibrationManager that can run tests to find the optimal “temperature” (creativity vs. predictability) for different types of LLM tasks. This improves reliability, using low temperatures for strict tasks like JSON formatting and higher, calibrated temperatures for creative prose.

-

Player Takeover and Interaction: A core design goal is to allow a human player to seamlessly intervene. The player can interrupt the AI story, select a character to control (or create a new one), and input their own actions and dialogue through a UI in the tcod window.

-

Data-Driven and Highly Configurable: Nearly every aspect of the engine—prompts, character personas, AI agent behaviors, system settings, and localization strings—is defined in external JSON files. This makes the system extremely flexible and easy to modify without changing the core Python code.

-

Robust Fallback Mechanisms: The code anticipates LLM failures. For tasks requiring structured output (like JSON), the command_parser employs a multi-step fallback system: it first tries to parse the output, then uses a specialized “fixer” agent (COMMAND_HANDLER) to repair malformed JSON, and finally falls back to simpler methods if necessary. This makes the system resilient to common LLM errors.
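
To make the fallback idea concrete, here is a minimal sketch of a parse, repair, then degrade chain. It is not the project’s command_parser: the function names, the `fixer` callable (a stand-in for whatever the COMMAND_HANDLER agent does), and the `{"action": "say", ...}` shape are all assumptions made up for illustration.

```python
import json
from typing import Callable, Optional


def extract_json_block(text: str) -> Optional[str]:
    """Return the span from the first '{' to the last '}', or None."""
    start = text.find("{")
    end = text.rfind("}")
    if start == -1 or end <= start:
        return None
    return text[start:end + 1]


def try_load(text: Optional[str]) -> Optional[dict]:
    """Parse text as a JSON object; return None on any failure."""
    try:
        parsed = json.loads(text)
    except (json.JSONDecodeError, TypeError):
        return None
    return parsed if isinstance(parsed, dict) else None


def parse_command(raw: str, fixer: Optional[Callable[[str], str]] = None) -> dict:
    # Step 1: the happy path, the model returned clean JSON.
    parsed = try_load(raw)
    if parsed is not None:
        return parsed

    # Step 2: hand the broken output to a "fixer" (hypothetical: in a real
    # system this would be another LLM call asked to repair the JSON).
    if fixer is not None:
        parsed = try_load(fixer(raw))
        if parsed is not None:
            return parsed

    # Step 3: a simpler method, cut away chatter around the JSON and retry.
    parsed = try_load(extract_json_block(raw))
    if parsed is not None:
        return parsed

    # Last resort: treat the whole reply as plain narration.
    return {"action": "say", "text": raw.strip()}
```

The point of the ordering is that each step is cheaper to trust than the next: a clean parse needs no second opinion, and the plain-text fallback means a bad reply degrades the turn instead of crashing it.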

Architectural Choices

The codebase is built on several key architectural decisions that prioritize responsiveness, flexibility, and reliability.

- Dual-Process Architecture: The application is split into two parallel processes to prevent the user interface from freezing during slow LLM API calls.
  - Main Process (main.py): Handles rendering the tcod window, user input, and event handling. It runs a fast, non-blocking loop.
  - Engine Process (story_engine.py): Contains all the heavy game logic, state management, and LLM API calls.
  - Communication: The two processes communicate safely using multiprocessing.Queue. The engine sends rendering updates (GameState) to the main process, and the main process sends user input and interrupt signals back to the engine.
- Stateful Manager Classes: Logic is separated into distinct manager classes, each with a single responsibility. This makes the code modular and easier to maintain. For example, TurnManager handles the logic for a single character’s turn, SetupManager handles all initialization, and ProcGenManager handles level creation.
- Centralized Data-Driven Configuration: A single Config object (config_loader.py) is instantiated at startup, loading all .json files from the /data directory. This object serves as the single source of truth for configuration, which is then accessed by all other parts of the application. This decouples the engine’s logic from its content.
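
As a rough illustration of the single-source-of-truth idea, a config object along these lines could look like the sketch below. Only the class name `Config` and the "load every .json file in one directory" behavior come from the summary; the dotted-key `get` method and the keying by file name are assumptions, not the real config_loader.py.

```python
import json
from pathlib import Path
from typing import Any, Dict


class Config:
    """Loads every .json file in a directory, keyed by file name (no extension)."""

    def __init__(self, data_dir: str) -> None:
        self._data: Dict[str, Any] = {}
        for path in sorted(Path(data_dir).glob("*.json")):
            with path.open(encoding="utf-8") as handle:
                self._data[path.stem] = json.load(handle)

    def get(self, dotted_key: str, default: Any = None) -> Any:
        """Look up 'file.key.subkey'; return default if any part is missing.

        The dotted-key lookup is a hypothetical convenience for this sketch.
        """
        node: Any = self._data
        for part in dotted_key.split("."):
            if not isinstance(node, dict) or part not in node:
                return default
            node = node[part]
        return node
```

With a file named settings.json containing `{"llm": {"temperature": 0.7}}`, a call like `Config("data").get("settings.llm.temperature")` would return that value, and every manager can be handed the same object instead of reading files on its own.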

Process Flow

The program’s execution can be broken down into three main phases: Setup, the Main Loop, and the Director’s Phase.

- Setup and Initialization (SetupManager):
  - When the application starts, it first determines whether to load a saved game or start a new one.
  - For a new run: It procedurally generates a scene prompt (SceneMakerManager), then uses that prompt as a seed for the Procedural Environment Generation (PEG) process. Characters from the “casting” files are loaded, spawned as entities on the 2D map, and contextually placed within the generated level.
  - The engine determines the lowest token context limit among all its configured LLMs to set a dynamic threshold for when to summarize the story.
  - At the end of setup, the initial GameState is sent to the rendering process.
- Main Story Loop (StoryEngine & TurnManager):
  - The engine runs in cycles. Each cycle begins by checking if the dialogue log has grown too long; if so, it uses the SummaryManager to condense the recent dialogue into a new summary.
  - A shuffled list of all characters (DMs, Leads, NPCs) is created for the turn order.
  - The TurnManager executes a turn for each character.
    - AI Turn: It builds a prompt containing the latest summary, relevant long-term memories, and recent dialogue. It calls the LLM, processes the response, and updates the game state. If the character is on the map, the response is parsed for movement intent (PositionManager) and the entity’s coordinates are updated.
    - Player Turn: If a character is player-controlled, the PlayerInterface requests input from the user for actions and movement.
  - After each turn, the updated GameState is sent to the rendering process so the user can see the results immediately.
- Director’s Phase (DirectorManager):
  - After all characters have had a turn, the cycle ends with the Director’s Phase.
  - The Director AI first decides if any temporary NPCs are no longer relevant and should be removed from the story.
  - It then randomly selects one “essential” character (a Lead or a DM) to review.
  - It analyzes the story context and issues a command: UPDATE the character’s instructions, REMOVE the character and replace them, or LOAD a new character from the casting files.
  - This action is executed, altering the cast for the next cycle. This completes the loop, and the next cycle begins.

Yeah… So… In a week, in conversational terms with minor edits, it made this.

I’m not here to tell you that AI is good for the world. I just don’t think it’s particularly helpful to address things with false sentiments. And it’s unequivocally false that AI is bad or useless for programmers.

It understood me when I asked it to use confidence values and intervals for calibration of model temperatures per agent task. The process works: it runs five tasks repeatedly until it finds a value that works consistently for output from a model. I tutored people in chemistry, people who probably have jobs now, who could not do the algebra for this, let alone understand what they were doing with statistics in our chemical quantitative analysis course.
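
For anyone wondering what “confidence intervals for temperature calibration” can mean in practice, here is one way to sketch it. This is not the code Gemini wrote: it assumes a single pass/fail task instead of five, and it assumes a Wilson score interval, which is just one reasonable choice. `run_task`, `trials`, and `min_confidence` are made-up names.

```python
import math
from typing import Callable, Iterable, Optional


def wilson_lower_bound(successes: int, trials: int, z: float = 1.96) -> float:
    """Lower end of the Wilson score interval for a success rate (z=1.96 ~ 95%)."""
    if trials == 0:
        return 0.0
    p = successes / trials
    denom = 1 + z * z / trials
    centre = p + z * z / (2 * trials)
    margin = z * math.sqrt(p * (1 - p) / trials + z * z / (4 * trials * trials))
    return (centre - margin) / denom


def calibrate_temperature(
    run_task: Callable[[float], bool],
    temperatures: Iterable[float],
    trials: int = 20,
    min_confidence: float = 0.8,
) -> Optional[float]:
    """Return the highest temperature whose success-rate lower bound
    clears min_confidence, or None if no temperature does.

    run_task(temp) is a hypothetical hook: call the model at that
    temperature and return True if the output was usable.
    """
    best = None
    for temp in sorted(temperatures):
        successes = sum(1 for _ in range(trials) if run_task(temp))
        if wilson_lower_bound(successes, trials) >= min_confidence:
            best = temp
    return best
```

Using the lower bound instead of the raw pass rate is what makes it “consistent”: a temperature that passed 5 out of 5 times has not proven much, while one that passed 20 out of 20 has a lower bound of roughly 0.84.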

It understood me when I said that there was an architectural issue when it was repeatedly playing whack-a-mole fighting the same bug over and over, and touching every single file in the codebase to make what should be minor changes. It did the refactoring for this with a handful of trivial bugs that I could have fixed myself, but which it usually fixed competently on its own when given error logs. Sometimes it would make very silly, overly complicated fixes that I had to correct it on, but it fixed them.

It got me when I told it that the stupid IT jargon it was using for the game logs was off-putting and immersion-breaking, and it rewrote them to not hurt your eyes so much with ====== SYSTEM ERROR BEEP BOOP CRITICAL FAILURE in procgen_manager.py COULD NOT FIND BUTT ======. I told it that in pretty much those terms. I could use humor to communicate with it.

So what now?

Am I a convert? No… I don’t like giving Google money. I do think people should have and use local models. I am an AI fan and have been since way, way before the current explosion in interest, all the way back to the 90s and aughts, wide-eyed reading sci-fi and toying with Markov chain bots (heh jfc I’m an AI hipster). But the explosion in multinationals training things that can understand humor better than your average Big Bang Theory viewer scares me, especially given recent developments in politics. I don’t want the drone hunting me down from a database entry in my Medicaid records to be able to understand irony, that’s absolutely fucked.

Most of all, what I am saying is you are undermining your argument and leaving people unprepared for that if you try to minimize the capabilities of these systems. They are powerful. For $20, a failed career, and a weekend indoors they can make you what you wanted to build but never had the free time to.

No one is served by telling people who are already doing that, and seeing its capabilities, that AI is a gimmick. You need to go out and make sure that you’re current with this technology if you’re positioning yourself to debate about it. I was not. Focus on the real problems, and don’t worry about the sociopaths who don’t care about them. They’re louder than the rest of the people you might convince, and easier to bump heads with in an argument, but they are few.